An interesting competition on choice engineering is being held here. The goal is to compare qualitative approaches (choice architecture) with quantitative models (the new field of choice engineering) and to determine which works best at influencing human decisions.

The competition was recently described in Nature Communications [1].

Choice architecture uses qualitative psychological principles in choice design. The recent development of quantitative models of choice can revolutionize this discipline. To launch this field, which we term choice engineering, we initiate a large-scale competition in which the effectiveness of qualitative principles and quantitative models will be compared.

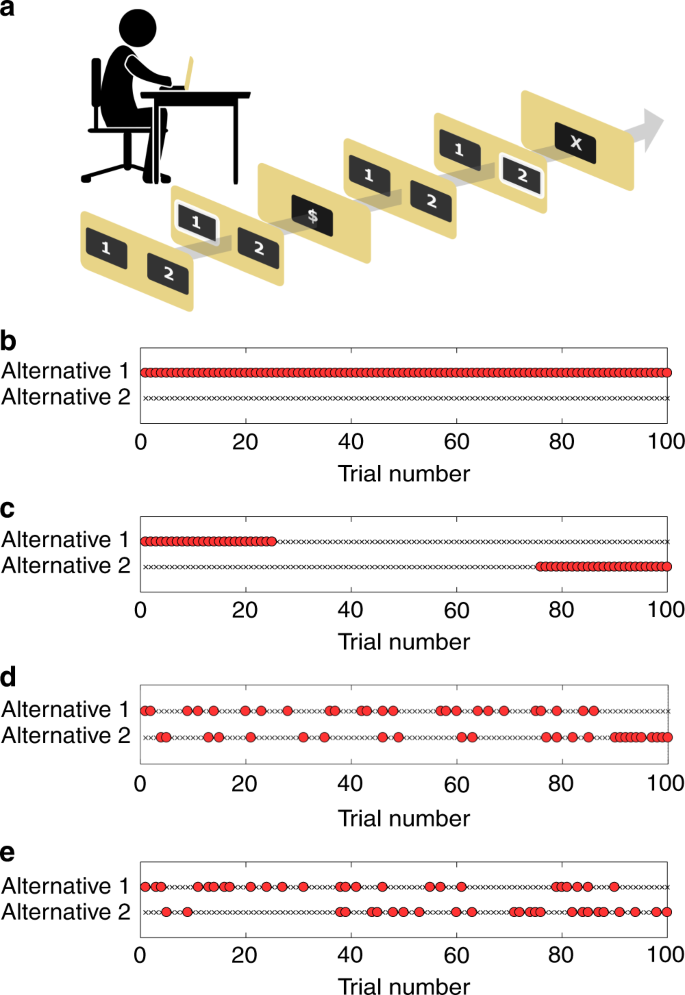

Fig. 1 shows different strategies for allocating rewards so as to maximally bias choice in a repeated two-alternative forced-choice task (a). If one could allocate all rewards to the preferred alternative (alternative 1 in Fig. 1), applying Thorndike’s Law of Effect [2] would result in the allocation shown in Fig. 1b. However, when rewards must be allocated equally to the two alternatives, the “Law of Effect is not specific enough to prescribe the optimal allocation of rewards”. One may then employ (c) choice architecture, which draws on various psychological principles (such as the primacy effect), or (d, e) various quantitative models. The quantitative models dynamically update the expected reward of each alternative based on past experience, i.e., they predict future choices from previously made choices and their outcomes.
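To make the idea of a quantitative model concrete, here is a minimal sketch of a simulated learner. It assumes a plain Q-learning update with a softmax (logistic) choice rule, which is an illustrative stand-in and not necessarily one of the models shown in Fig. 1. The function name `simulate_q_learner` and the parameters `alpha` and `beta` are hypothetical, and their default values are arbitrary rather than fitted to data.

```python
import math
import random


def simulate_q_learner(rewards_1, rewards_2, alpha=0.1, beta=3.0, seed=0):
    """Return the fraction of trials in which a simulated agent picks alternative 1.

    rewards_1 / rewards_2: one 0/1 flag per trial, saying whether a reward is
    assigned to that alternative in that trial.
    alpha (learning rate) and beta (inverse temperature) are illustrative
    assumptions, not parameters taken from the competition.
    """
    rng = random.Random(seed)
    q = [0.0, 0.0]  # current expected reward of each alternative
    picks_1 = 0
    for r1, r2 in zip(rewards_1, rewards_2):
        # Softmax over two options reduces to a logistic function of the difference.
        p1 = 1.0 / (1.0 + math.exp(-beta * (q[0] - q[1])))
        choice = 0 if rng.random() < p1 else 1
        reward = (r1, r2)[choice]
        # Move the chosen alternative's estimate toward the observed outcome.
        q[choice] += alpha * (reward - q[choice])
        picks_1 += choice == 0
    return picks_1 / len(rewards_1)


# Example schedule: rewards for alternative 1 come early, those for alternative 2 late.
early_1 = [1] * 25 + [0] * 75
late_2 = [0] * 75 + [1] * 25
print(simulate_q_learner(early_1, late_2))
```

Under a model like this, different schedules can be scored by the predicted bias toward alternative 1 before any human subject is tested, which is the sense in which a quantitative model can prescribe an allocation.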

The question is which approach works best at engineering human behavior, and this is what the competition is really about.

[Participants need to] propose a reward schedule that maximally biases the choices of human subjects in a repeated, two-alternative, forced-choice experiment, which we denote as a session. A session consists of 100 trials. In each trial, a reward may be assigned to one, two or none of the alternatives, complying with the global constraint of assigning a reward to exactly one-quarter of the trials (25 trials) of each alternative (as in Fig. 1c–e).

[1]
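The constraint quoted above is easy to check programmatically. The following is a minimal sketch, assuming a schedule is represented as two lists of 0/1 flags, one per alternative and one entry per trial. This representation and the names `is_valid_schedule` and `random_schedule` are hypothetical and are not the competition's submission format.

```python
import random

N_TRIALS = 100                 # trials per session, as stated in the quote
REWARDS_PER_ALTERNATIVE = 25   # one-quarter of the trials for each alternative


def is_valid_schedule(rewards_1, rewards_2):
    """Check that both alternatives have exactly 25 rewarded trials out of 100."""
    for rewards in (rewards_1, rewards_2):
        if len(rewards) != N_TRIALS:
            return False
        if any(flag not in (0, 1) for flag in rewards):
            return False
        if sum(rewards) != REWARDS_PER_ALTERNATIVE:
            return False
    return True


def random_schedule(seed=None):
    """Draw a random valid schedule; rewards on the two alternatives are independent."""
    rng = random.Random(seed)

    def one_alternative():
        rewarded = set(rng.sample(range(N_TRIALS), REWARDS_PER_ALTERNATIVE))
        return [1 if t in rewarded else 0 for t in range(N_TRIALS)]

    return one_alternative(), one_alternative()


schedule = random_schedule(seed=1)
print(is_valid_schedule(*schedule))
```

Because the two lists are drawn independently, a given trial can end up rewarding one, both, or neither alternative, matching the wording of the rule.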

(We recently described a very similar concept, in which machine learning augments human decision making to maximally bias (nudge) a population toward following personalized interventions [3]. Comments welcome!)

[1] Dan, O. & Loewenstein, Y. From choice architecture to choice engineering. Nat. Commun. 10, 2808 (2019).

[2] Thorndike, E. L. The law of effect. Am. J. Psychol. 39, 212 (1927).

[3] Hrnjic, E. & Tomczak, N. Machine learning and behavioral economics for personalized choice architecture. arXiv:1907.02100 (2019).
